We Built Rotector and It's Finally Ready

Nine months ago, I got fed up with seeing dangerous people slip through the cracks on Roblox and decided to do something about it. Today, Rotector is live, and I'm pretty excited about what we managed to build.

Here's What We Found

Roblox is supposed to be for kids, but some people use it to cause harm. Community groups try to catch them, but with millions of users every day, a lot gets missed.

So far, our system has found 122 inappropriate groups, and disturbingly, in 28 of them normal users outnumber flagged ones. These spaces blend in really well and trick innocent players into joining.

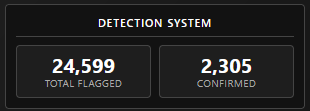

The scary part? We have no idea how many bad actors are actually on Roblox. Could be 100,000. Could be way more. Our small team flagged over 24,000 in just three days of testing. Makes you wonder what else is out there.

Scrolling through our system's database of flagged inappropriate groups (censored for safety)

How We Built This System

Rotector started as a messy prototype nine months ago. It kind of worked but flagged way too many innocent people. We kept tweaking it, learning from mistakes, until that rough experiment turned into something that actually works.

In just three days of testing, we flagged over 24,000 users and confirmed more than 2,000 of them. Each review only takes seconds to a few minutes.

It took a lot of trial and error, but we figured out how to make something useful.

Why Current Solutions Aren't Enough

Roblox's Moderation

Roblox has moderators, but they're overwhelmed. Of the 24,000 users we flagged, only 904 got banned by Roblox. That's less than 4% of what we found.

This isn't entirely Roblox's fault. They're dealing with millions of users and reports every day. They can only act after someone reports something, and by then it might be too late.

They mostly focus on chat moderation and scanning uploaded content. But dangerous people don't always say bad things in chat. They can still cause harm without triggering these filters.

Community Groups

Groups like MFD (Moderation for Dummies) do amazing work filling the gaps. These people don't get nearly enough credit for what they do.

MFD manually checks suspicious users and warns the community. When they find someone bad, they document everything and spread the word.

But there's a big problem: they can't scale. Even the most dedicated volunteers can only check so many profiles per day.

Plus, constantly looking at inappropriate content burns people out. It's emotionally draining work that's hard to keep up long-term.

What Makes Rotector Different

We took a different approach. Instead of replacing human reviewers, we wanted to help them work faster.

Our AI scans thousands of profiles every hour and flags the suspicious ones. It doesn't get tired or overwhelmed, and it catches patterns humans might miss.

A real person still reviews every flag. The AI doesn't ban anyone or make final decisions. Think of it like having someone tap you on the shoulder and say "hey, this profile looks suspicious." Then a human reviewer checks the evidence and decides what to do.
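To make the split between AI and human concrete, here is a minimal sketch of that workflow. It is not Rotector's actual code: the names `Flag`, `Decision`, `ai_flag`, and `human_review` are made up for illustration. The only point it tries to show is that the AI side can create a pending flag but has no way to set the final decision.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Decision(Enum):
    CONFIRMED = "confirmed"
    CLEARED = "cleared"


@dataclass
class Flag:
    user_id: int
    reason: str                          # explanation written by the AI
    decision: Optional[Decision] = None  # stays None until a human reviews it


def ai_flag(user_id: int, reason: str) -> Flag:
    """AI side (hypothetical): create a pending flag, never a decision."""
    return Flag(user_id=user_id, reason=reason)


def human_review(flag: Flag, is_violation: bool) -> Flag:
    """Human side (hypothetical): the reviewer makes the final call."""
    flag.decision = Decision.CONFIRMED if is_violation else Decision.CLEARED
    return flag


def pending(flags: List[Flag]) -> List[Flag]:
    """Flags that are still waiting for a human reviewer."""
    return [f for f in flags if f.decision is None]


queue = [ai_flag(101, "bio matches a known pattern"), ai_flag(102, "flagged friends")]
human_review(queue[0], is_violation=True)
print(len(pending(queue)))  # one flag is still waiting for review
```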

The Speed Difference

Here's the thing: checking 100 suspicious users manually takes most of a day. With Rotector, it takes less than an hour.

Think about what a human reviewer normally has to do. Look at the profile, scroll through pages of outfits, check their friends, see what groups they're in, then write up why they flagged them. That's easily 5-10 minutes per user if you're being thorough.

Traditional Approach:

| Task | Junior Review | Reason Writing | Senior Review | Total Time |
|---|---|---|---|---|
| Profile Analysis | 30-45 minutes | 35-50 minutes | 20-30 minutes | 85-125 minutes |
| Outfit Analysis | 60-90 minutes | 60-80 minutes | 50-70 minutes | 170-240 minutes |
| Friend Analysis | 40-60 minutes | 45-65 minutes | 25-40 minutes | 110-165 minutes |
| Group Analysis | 25-35 minutes | 30-40 minutes | 18-25 minutes | 73-100 minutes |

Rotector Approach:

| Task | Junior Review (AI) | Reason Writing (AI) | Senior Review | Total Time |
|---|---|---|---|---|
| Profile Analysis | 5 seconds | 8 seconds | 15-25 minutes | 15-25 minutes |
| Outfit Analysis | 30 seconds | 4 seconds | 35-45 minutes | 35-45 minutes |
| Friend Analysis | <1 second | 4 seconds | 20-35 minutes | 20-35 minutes |
| Group Analysis | <1 second | 4 seconds | 15-20 minutes | 15-20 minutes |

Our AI does all that initial work in seconds. It scans the profile, checks all the outfits, looks at friends and groups, then writes up why it flagged them. A human reviewer just needs to verify the AI's work and make the final call.

Instead of spending 10 minutes investigating one user, a reviewer can verify 5+ users in that same time. The AI also writes consistent explanations, unlike humans who might describe the same issue differently each time.
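If you want to check the totals yourself, the figures from the two tables above can be added up with a few lines of Python. This is just arithmetic on the published numbers. It assumes the AI stages, which take seconds, round to zero minutes, the same way the Total Time column treats them.

```python
# (low, high) minutes per stage, copied from the two tables above.
TRADITIONAL = {
    "Profile Analysis": [(30, 45), (35, 50), (20, 30)],
    "Outfit Analysis": [(60, 90), (60, 80), (50, 70)],
    "Friend Analysis": [(40, 60), (45, 65), (25, 40)],
    "Group Analysis": [(25, 35), (30, 40), (18, 25)],
}

# Only the human (senior review) stage is counted for Rotector;
# the AI stages are a few seconds each and are treated as zero minutes.
ROTECTOR = {
    "Profile Analysis": [(15, 25)],
    "Outfit Analysis": [(35, 45)],
    "Friend Analysis": [(20, 35)],
    "Group Analysis": [(15, 20)],
}


def total_range(table):
    """Sum the low and high ends of every stage across all tasks."""
    low = sum(lo for stages in table.values() for lo, _ in stages)
    high = sum(hi for stages in table.values() for _, hi in stages)
    return low, high


trad_low, trad_high = total_range(TRADITIONAL)
rot_low, rot_high = total_range(ROTECTOR)
print(f"Traditional: {trad_low}-{trad_high} minutes")  # Traditional: 438-630 minutes
print(f"Rotector:    {rot_low}-{rot_high} minutes")    # Rotector:    85-125 minutes
```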

The cost? $20 for every 10,000 users we flag.

Every hour saved means more kids protected. If traditional methods take all day to process what we can do in an hour, that's 23 extra hours we could spend finding new threats.

How It Works

We built different parts to look for different problems. One part scans friend networks, another checks groups, and so on.

Each part can process up to 48,000 users every 24 hours. That might not sound crazy fast, but think about what's happening. We're grabbing every piece of public info from a user's profile and checking it all.
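For a sense of scale, here is a rough back-of-the-envelope sketch of what those numbers imply. It assumes the 48,000 users are spread evenly over the day and that the $20 per 10,000 flagged users cost mentioned earlier scales linearly. Both are simplifying assumptions, and the function names are only for illustration.

```python
USERS_PER_DAY = 48_000          # per-part capacity quoted above
COST_PER_10K_FLAGGED = 20.0     # dollars, quoted earlier in this post


def users_per_hour(users_per_day: int = USERS_PER_DAY) -> float:
    """Average hourly throughput, assuming an even spread over 24 hours."""
    return users_per_day / 24


def seconds_per_user(users_per_day: int = USERS_PER_DAY) -> float:
    """Average time budget per user for one part of the system."""
    return 24 * 60 * 60 / users_per_day


def flagging_cost(flagged_users: int) -> float:
    """Estimated cost in dollars, assuming the cost scales linearly."""
    return flagged_users * COST_PER_10K_FLAGGED / 10_000


print(users_per_hour())        # 2000.0 users per hour
print(seconds_per_user())      # 1.8 seconds per user
print(flagging_cost(24_000))   # 48.0 dollars for the 24,000 users flagged in testing
```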

The AI looks for stuff that would be nearly impossible for humans to catch at this scale. Suspicious friend networks, weird group patterns, inappropriate outfits. Imagine manually checking outfits for thousands of users. That would take weeks. For the AI, it's just another thing to analyze.

If you want to see how it works, we have some interactive simulations on our homepage.

What We Check

We look at everything public on a user's Roblox profile.

- Profile info like usernames, display names, and bios. Sometimes innocent things become concerning when you see the pattern.

- Who they're friends with matters a lot. If someone's friends list is full of flagged users, that's suspicious. We also check what groups they're in and how active they are in sketchy communities.

- Outfit checking is where we really shine. Think about manually checking outfits for 100 users who each have 10 pages of clothing. That's 1,000 pages to scroll through. Would take days.

Our AI scans all those outfits in seconds. It catches stuff like bypassed fetishwear, inappropriate swimwear, see-through clothing, and other things that slip past Roblox's filters. Stuff that would be impossible to catch manually at scale.
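As a simplified illustration of the friends-list check described above, here is a minimal sketch that measures what share of a user's friends are already flagged. This is not our production logic. The function names and the 0.5 threshold are made up for the example, and a real check would weigh many more signals.

```python
from typing import Iterable, Set


def flagged_friend_ratio(friend_ids: Iterable[int], flagged_ids: Set[int]) -> float:
    """Share of a user's friends that are already flagged (0.0 if no friends)."""
    friends = list(friend_ids)
    if not friends:
        return 0.0
    flagged = sum(1 for friend in friends if friend in flagged_ids)
    return flagged / len(friends)


def needs_review(friend_ids: Iterable[int], flagged_ids: Set[int],
                 threshold: float = 0.5) -> bool:
    """Queue the user for human review if the ratio reaches the threshold.

    The threshold is a hypothetical value chosen for this example.
    """
    return flagged_friend_ratio(friend_ids, flagged_ids) >= threshold


already_flagged = {2, 3, 7}
print(flagged_friend_ratio([1, 2, 3, 4], already_flagged))  # 0.5
print(needs_review([1, 2, 3, 4], already_flagged))          # True
print(needs_review([1, 4, 5, 6], already_flagged))          # False
```

Even in this toy version, the result is only a reason to put the profile in front of a human reviewer, never a verdict on its own.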

Current avatars of users flagged by our system for inappropriate outfits

You might be wondering why some of these avatars look totally normal. That's because you're only seeing the outfit they're wearing right now. You don't really know someone until you check their saved outfits and inventory. Someone could own lots of inappropriate clothing but swap to something innocent whenever they want.

This is why we're so much faster. What takes human moderators hours or days, we handle in minutes. And we catch stuff they'd probably miss.

Try the Browser Extension

The browser extension puts Rotector right in your browser. You'll see safety warnings next to flagged users anywhere on Roblox. Home page, friends lists, group pages, everywhere. It's like having a warning system built into the platform.

Found someone suspicious who's not in our system yet? You can submit them to be scanned and reviewed. We have daily limits to keep quality high, and we don't track your browsing.

Submit suspicious users for scanning directly from their profile

We're not trying to replace groups like MFD. We want to help them work better. When an MFD investigator checks someone out, they can see instantly if we've already flagged them. Saves time and gives more context.

Give It a Try

Rotector works well now, but we're just getting started. What you see today is the foundation. We keep improving the detection and adding features.

Will AI completely replace human moderators? Probably not soon. AI is good at spotting patterns, but humans still need to make the final calls.

Making Roblox safer takes all of us. Every download helps, and every user you submit makes the system better for everyone.

🌐 Download the Chrome Extension | 🦊 Get the Firefox Add-on

Frequently Asked Questions

Is this free?

Yep, completely free. Safety tools should be available to everyone.

What happens when you flag someone?

They show up with warnings in our browser extension. If Roblox or authorities need details about specific cases, we'll cooperate. Parents can use this to check their kid's friends list.

How often are you wrong?

Out of every 100 users we flag, only 1-2 are innocent. So we're right about 98-99% of the time. Plus a human double-checks every flag.

How do parents use this?

Install the extension and check your kid's Roblox friends list. You'll see warnings next to any flagged users in their social circle.

What data do you collect?

Just what we need for the safety system to work. Full details in our privacy policy at rotector.com/privacy.

Who are you and why should I trust this?

I'm jaxron. I built this because I got tired of seeing dangerous people slip through the cracks on Roblox. Lots of community projects tried to solve this but hit technical limitations. I thought it was impossible for one person to tackle, but I started in September 2024 and kept building.

Is Roblox involved?

Nope, we're completely independent. Given Roblox's moderation track record, they probably wouldn't support this anyway.

Why not just report users normally?

Bad actors are good at hiding what they're doing. Our extension shows you warnings instantly instead of making you guess who might be dangerous.

What if you flag someone innocent?

We'll clear them from the system during our review process. We're also building an appeals system for people who think we made a mistake.

Questions about Rotector? Join our Discord community. Together, we are building a safer internet.